We had an overwhelming response to our call for universities and FE/skills providers to help pilot the Digital Experience Tracker – over 50 institutions completed the planning sheet and applied to take part.

One outcome is that we have extended the number of institutions we are working with to more than twenty: 12 Universities, 10 FE and Skills providers and 2 Specialist Colleges. These institutions are currently piloting the Tracker survey with their learners. The surveys will close on 29th April, after which we will be working with the pilot sites to:

- help them understand their findings in relation to their sector overall

- help them respond to the findings, working in partnership with learners

- explore their experience of using the Tracker as a pilot service

- consult about whether or not to roll out the Tracker as a full service, and if so how we can improve it further

We will also be looking to see what we can learn from the overall findings about students’ experiences of digital technology across the sectors.

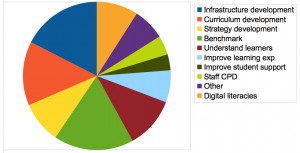

As part of this sector-wide learning, I have done a first analysis of the planning sheet returns. These are the responses we recorded from the institutions that applied to be part of the pilot (big thanks if you were one!). We will be sharing the detail with the institutions involved, but the highlights make interesting reading, especially coded responses to the question ‘What is the main reason for wanting to run the Tracker?’

In FE (17 respondents) the reasons given in descending order of frequency were:

- Inform development of digital infrastructure, systems and environment (11)

- Inform development of digital curriculum (mainly blended learning) (9)

- Establish a benchmark/baseline for comparison (often further specified e.g. in relation to the curriculum or student satisfaction) (7)

- Inform development of digital strategies (mainly ILT and e-learning) (7)

In HE (33 respondents) there was considerable overlap but also some suggestive differences:

- Establish a benchmark/baseline for comparison (often further specified e.g. in relation to the curriculum or student satisfaction) (18)

- Inform development of digital infrastructure, systems and environment (14)

- Understand learner perspective/engage with learners around their digital experience (12) – with particular interest in understanding ‘what technologies learners are using’ and how

- Inform development of digital curriculum (also learning and teaching) (11)

(Note that the count is the number of respondents giving at least one response that was coded in this way. Respondents could give as many responses as they wanted, but if they gave two responses that were coded in the same way this still only counted once. Responses from two specialist providers were not coded as they could not be considered representative, and with such a small number it might be possible to identify them.)
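For anyone wanting to apply the same counting rule to their own coded responses, here is a minimal sketch in Python. It assumes each respondent's answers have already been coded into a list of labels. The function name, the respondent identifiers and the sample data are all invented for illustration and are not the actual planning sheet returns.

```python
from collections import Counter


def count_respondents_per_code(coded_responses):
    """Count how many respondents gave at least one response with each code.

    coded_responses maps a respondent identifier to the list of codes
    assigned to that respondent's answers (hypothetical structure).
    """
    counts = Counter()
    for codes in coded_responses.values():
        # set() drops repeats, so two answers with the same code
        # from one respondent still only count once.
        counts.update(set(codes))
    return counts


# Invented sample data, for illustration only.
sample = {
    "respondent_a": ["benchmark", "infrastructure", "benchmark"],
    "respondent_b": ["infrastructure"],
    "respondent_c": ["curriculum", "benchmark"],
}

counts = count_respondents_per_code(sample)
for code, n in counts.most_common():
    print(f"{code}: {n}")
```

Because a respondent can appear under several codes, the counts for a sector can add up to more than the number of respondents.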

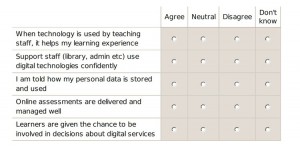

You can see a more detailed breakdown in this chart. An interesting finding for me – confirmed by several emails and comments – is the number of people who want to use the Tracker to assess learners’ digital literacies or capabilities. In fact, as we have tried to clarify, this is not its purpose. Jisc is doing other work on digital capabilities, currently focused on building a staff tool but with the potential to develop a learner-facing tool later in the process. It is encouraging to know that there is so much enthusiasm for these developments, but important to manage expectations of the Tracker. A bit like other national surveys – though with a digital focus – the Tracker is intended to gain a picture of how learners feel about their experience overall, and to compare relatively large student groups.

So if we ask ‘why has it been so popular?’, the answer seems to be that the opportunity to find out more about learners’ digital experiences supports a number of different agendas: strategy development, curriculum and learning/teaching enhancement, making sure investments in infrastructure are well targeted, and broader national agendas such as FELTAG. There is a growing recognition that digital factors are important in learners’ overall experience. Pragmatically, we all want to be more efficient about the data we collect – especially through surveys – and ensure that we are asking the right questions. Participants see the Tracker as a chance to use a well-designed and tested question set, in an established format, that supports benchmarking with others in their sector, and baselining as they improve their own learners’ digital experience.

Read more about what the Tracker can do and follow developments of the Pilot project.