by Jay Dempster, JISC Evaluation Associate

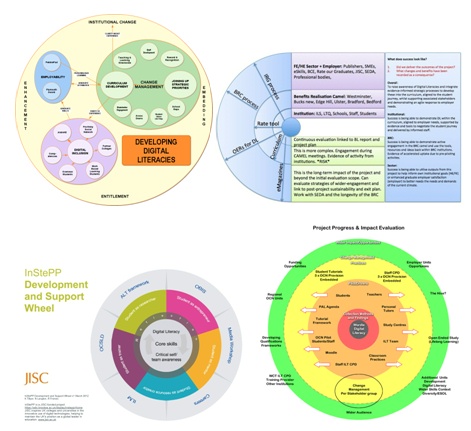

The JISC Developing Digital Literacies Programme team has been supporting its institutional projects to design and undertake a holistic evaluation. Projects are thinking critically about the nature, types and value of evidence as early indicators of change and several projects now have some useful visual representations of their project’s sphere of influence and evaluation strategy. A summary produced this month is now available from the JISC Design Studio at: http://bit.ly/designstudio-dlevaluation.

Structuring & mapping evaluation

The point is to reach a stage in designing the evaluation where we can clearly articulate and plan an integrated methodology that informs and drives a project towards its intended outcomes. Projects that have achieved a clearly structured evaluation strategy have:

- defined the purpose and outputs of each component;

- considered their stakeholder interests & involvement at each stage;

- identified (often in consultation with stakeholders) some early indicators of progress towards intended outcomes as well as potential measures of impact/success;

- selected appropriate methods/timing for gathering and analysing data;

- integrated ways to capture unintended/unexpected outcomes;

- identified opportunities to act upon emerging findings (e.g. report/consult/revise), as well as to disseminate final outcomes.

Iterative, adaptive methodologies for evaluation are not easy, yet are a good fit for these kinds of complex change initiatives. Approaches projects are taking in developing digital literacies across institutions include:

- Adopting an action-learning approach within discipline-specific contexts

- Locating digital literacies within relevant local and national frameworks including a strong emphasis on employability

- Developing an ‘agile’ methodology

- Interventions based on empirical studies of what staff/students are doing in practice.

What is meant by ‘evidence’?

Building into the evaluation ways to capture evidence both from explicit, formal data-gathering activities with stakeholders and from informal, reflective practices on the project’s day-to-day activities can offer a continuous development & review cycle that is immensely beneficial to building an evidence base.

However, it can be unclear to projects what is meant by ‘evidence’ in the context of multi-directional interactions and diverse stakeholder interests. We have sought first to consider who the evidence is aimed at and second, to clarify its specific value to them.

This is where evaluation can feed into dissemination, and vice versa, both being based upon an acute awareness of one’s target audience (direct and indirect beneficiaries/stakeholders) and leading to an appropriate and effective “message to market match” for dissemination.

In the recent evaluation support webinar for projects, we asked participants to consider the extent to which they can rehearse their ‘evidence interpretation’ BEFORE they collect the evidence, for instance, by exploring:

- Who are your different stakeholders and what are they most likely to be interested in?

- What questions or concerns might they have?

- What form of information/evidence is most likely to suit their needs?

An evaluation reality-check

We prefaced this with an ‘evaluation reality-check’ questionnaire, which proved a useful tool both for projects’ self-reflection and for the support team to capture a snapshot of where projects are with an overall design for their evaluations. What can we learn from these collective strategies, and how useful is the data that is being collected?

As projects share and discuss their evaluation strategies, we are developing a collective sense of how they are identifying, using and refining their indicators and measures for the development of digital literacies in relation to intended aims. We are also conscious of the need to build in mechanisms for capturing unexpected outcomes.

Through representing evaluation designs visually and reflecting on useful indicators and measures of change, we are seeing greater clarity in how projects are implementing their evaluation plans. Working with the grain of those very processes they aim to support for developing digital literacies in their target groups, our intention is that:

- methods are realistic & reliable;

- questions are valid & relevant; and

- findings are valuable & actionable by stakeholders.
